Apple Acquires Q.ai: A Silent Revolution in AI Interaction Through Facial Micromovements

Apple's acquisition of Israeli AI startup Q.ai for up to $2 billion signals a major shift toward face-controlled AI. The startup's technology interprets whispered and silent speech from subtle facial movements, with implications for wearables, spatial computing, and privacy.

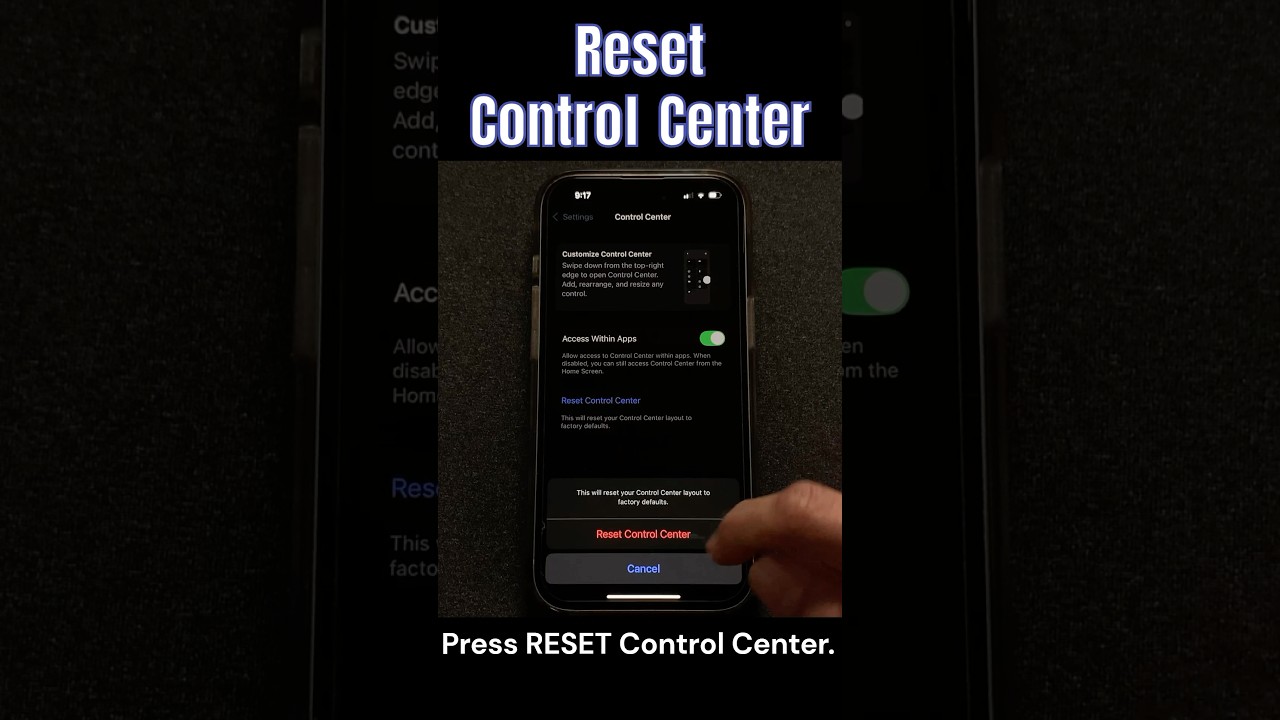

In a move that could reshape how people interact with their devices, Apple has acquired Israeli artificial intelligence startup Q.ai. The deal, valued at between $1.6 billion and $2 billion, is Apple's largest acquisition since its 2014 purchase of Beats. Q.ai's core technology interprets whispered and silent speech by analyzing subtle facial micromovements, pointing to a future in which interaction with AI may go beyond traditional voice and touch commands. The investment underscores Apple's commitment to pioneering new interfaces amid intense competition in conversational AI.

The Dawn of Facial Interfaces

At the heart of the Q.ai acquisition is a sophisticated technology capable of detecting facial skin micromovements associated with speech. Even in the absence of audible sound, the muscles involved in forming words continue to move in predictable patterns. Q.ai's system leverages a combination of imaging, advanced audio processing, and machine learning to map these subtle movements to specific words and user intent. This capability extends beyond conventional lip-reading, which primarily relies on visible mouth shapes. Instead, Q.ai's systems are designed to capture more nuanced cues across the face that may be imperceptible to the human eye, enabling devices to infer commands even when speech is whispered or entirely silent.
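Neither Apple nor Q.ai has published how the system works. As a rough illustration only, the last step described above (mapping measured movements to a word) can be pictured as a nearest-centroid classifier. The sketch below assumes that each silent utterance has already been reduced to a fixed-length vector of micromovement features; the feature values, the command words, and the `SilentCommandClassifier` name are all hypothetical, and a production system would almost certainly use far richer models.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

# Hypothetical representation: one utterance summarised as a fixed-length
# vector of micromovement features (e.g. mean skin displacement per facial region).
Vector = list[float]


def mean_vector(samples: list[Vector]) -> Vector:
    """Average several training samples into one class centroid."""
    n = len(samples)
    return [sum(values) / n for values in zip(*samples)]


def euclidean(a: Vector, b: Vector) -> float:
    """Straight-line distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


@dataclass
class SilentCommandClassifier:
    centroids: dict[str, Vector]  # one averaged feature vector per command word
    max_distance: float  # inputs farther than this from every centroid are rejected

    @classmethod
    def fit(cls, training: dict[str, list[Vector]], max_distance: float) -> SilentCommandClassifier:
        """Build one centroid per word from labelled example vectors."""
        return cls({word: mean_vector(s) for word, s in training.items()}, max_distance)

    def predict(self, features: Vector) -> str | None:
        """Return the closest command word, or None if nothing is close enough."""
        word, dist = min(
            ((w, euclidean(features, c)) for w, c in self.centroids.items()),
            key=lambda pair: pair[1],
        )
        return word if dist <= self.max_distance else None


# Toy, made-up training data for two hypothetical silent commands.
training = {
    "next": [[0.9, 0.1, 0.2], [1.1, 0.0, 0.1]],
    "stop": [[0.1, 0.8, 0.9], [0.0, 1.0, 1.1]],
}
clf = SilentCommandClassifier.fit(training, max_distance=0.5)
print(clf.predict([1.0, 0.05, 0.15]))  # closest to the "next" centroid
print(clf.predict([5.0, 5.0, 5.0]))  # far from both centroids, so rejected
```

The rejection threshold reflects a practical concern for any silent interface: a device should ignore ordinary facial movement rather than force every twitch onto the nearest command.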

For users, the implications are profound. Such an interface could make interactions with digital assistants more discreet and socially acceptable, particularly in environments where speaking aloud is impractical or disruptive. Imagine conducting a sensitive inquiry in a busy office, controlling devices during a meeting, or accessing information in a quiet healthcare setting, all without uttering a sound. This innovation is a clear indication that Apple is betting on novel ways for users to engage with AI, moving beyond established voice and touch paradigms, as detailed by UC Today.

A Cornerstone for Wearables and Spatial Computing

The potential integration of Q.ai's technology into Apple's burgeoning ecosystem, especially in the realm of wearables and spatial computing, is particularly compelling. Apple has already positioned its Vision Pro as a significant leap into spatial computing and is widely anticipated to develop lighter, more everyday smart glasses in the future. In these form factors, sole reliance on voice control presents both technical and social limitations. Silent speech and facial intent detection could emerge as a critical control layer for head-worn devices, empowering users to interact with digital overlays, virtual assistants, and collaborative tools without disruptive verbal commands.

For enterprise applications, this technology could unlock hands-free access to information, streamline task management, and provide real-time guidance in environments where noise, privacy concerns, or safety protocols hinder traditional voice interaction. Within Unified Communications (UC) scenarios, silent controls could enable meeting participants to trigger actions, retrieve essential information, or manage meeting settings without interrupting ongoing discussions. This could fundamentally reshape how AI is integrated into daily workplace workflows, fostering a more seamless and less obtrusive digital experience, according to UC Today.

Navigating Privacy and Biometric Frontiers

Beyond silent speech interpretation, Q.ai's patents hint at broader capabilities, including the assessment of emotional states and physiological indicators like heart rate and respiration through facial analysis. While Apple has not yet outlined plans to deploy these advanced features, their existence suggests a future where AI systems could become more context-aware and responsive to a user's emotional and physical state. Theoretically, this could lead to more adaptive and empathetic digital assistants, capable of adjusting their tone, urgency, or recommendations based on detected stress or fatigue. In workplace settings, such features might be framed as part of wellness, accessibility, or safety initiatives.

However, these advanced capabilities inevitably raise significant privacy and governance concerns. Facial and physiological analysis involves highly sensitive biometric data. In enterprise environments, there is a distinct risk that such technology could be perceived as employee monitoring, even if deployed with seemingly benign intentions. Critical considerations regarding consent, transparency, and regulatory compliance will be paramount, particularly in regions with stringent data protection and workplace surveillance laws. While Apple's long-standing emphasis on privacy and on-device processing may help alleviate some concerns, the challenge will extend beyond technical safeguards to encompass public perception and trust. As AI systems become more intimately intertwined with the human body and face, user acceptance will be a pivotal factor in adoption.

A Platform-Level Bet on the Next Interface

This strategic acquisition by Apple bears a striking resemblance to previous foundational moves. For instance, the company's 2013 acquisition of PrimeSense laid the groundwork for Face ID, which evolved from advanced sensing technology into a ubiquitous interface across Apple devices. Notably, Q.ai’s CEO also founded PrimeSense, reinforcing the expectation that this new technology could follow a similar trajectory. If this pattern holds, silent speech and facial intent detection may initially emerge as niche or advanced features before gradually becoming mainstream interaction methods, eventually sitting alongside touch, voice, and gesture as core ways of controlling devices.

For Apple, this acquisition represents a long-term, platform-level bet on owning the interface layer in an increasingly competitive AI market. Rather than solely contending on the performance of AI models, Apple is strategically positioning itself around how naturally, discreetly, and contextually users can interact with intelligent systems. For the broader UC market, the long-term implications are substantial. Silent commands, facial-based controls, and emotion-aware systems could fundamentally alter how employees engage with meetings, digital assistants, and shared workspaces, redefining the concepts of hands-free and voice-enabled interactions. Ultimately, Apple is not merely acquiring an AI company; it is investing in a paradigm shift for human-machine communication—one that relies less on vocalization and more on subtle movement, nuanced intent, and contextual understanding, as highlighted by UC Today.
